If your career is just starting or hasn't followed a traditional path, don't let that stop you from considering Twilio. While having “desired” qualifications makes for a strong candidate, we encourage applicants with alternative experiences to also apply. Twilio values diverse experiences in other industries, and we encourage everyone who meets the required qualifications to apply.

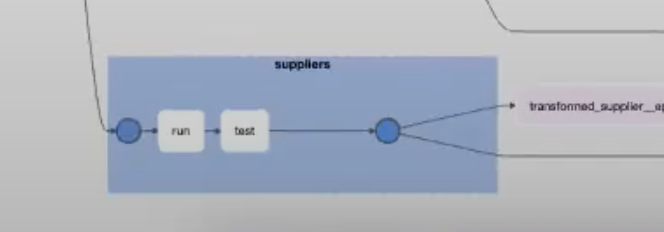

Not all applicants will have skills that match a job description exactly.

Responsibilities:

- Build and maintain robust and reliable internal data pipelines, which generate marketing datasets and power key metrics reporting and dashboards on topics such as marketing spend, campaign attribution, lead acquisition, etc.
- Develop and deliver data modeling and predictive analytics solutions to Marketing and Sales stakeholders
- Analyze marketing performance results to generate business insights, inform marketing strategy, and unlock potential value
- Be part of a team of data scientists who work across the Go-to-Market functions, while collaborating with cross-functional teams including Data Engineering, Paid Marketing, Marketing Strategy and Operations, and Sales

Reporting to the GTM Data Science & Analytics Lead, this position will work closely with various marketing partners to jointly drive improvements not just at the top of the funnel, but across our sales motion and marketing landscape. This position is needed to accelerate the growth and efficiency of our marketing efforts.

At Twilio, we support diversity, equity & inclusion wherever we do business. We're on a journey to becoming a global company that actively opposes racism and all forms of oppression and bias.

Twilio powers real-time business communications and data solutions that help companies and developers worldwide build better applications and customer experiences. Although we're headquartered in San Francisco, we have a presence throughout South America, Europe, Asia and Australia.

Typically this can be used when you need to combine the dbt command with another task in the same operator, for example running dbt docs and uploading the docs to somewhere they can be served from.

Join the team as our next Senior Data Scientist, Marketing on Twilio’s Segment product team.

Typically you will want to use the DbtRunOperator, followed by the DbtTestOperator, as shown earlier.
It will also need access to the dbt CLI, which should either be on your PATH or can be set with the dbt_bin argument in each operator.

There are five operators currently implemented, including the DbtRunOperator and the DbtTestOperator.

Each of the above operators accepts the following arguments:

- If set as a kwarg dict, passes the given environment variables as the arguments to the dbt task.
- If set, passed as the --profiles-dir argument to the dbt command.
- If set, passed as the --target argument to the dbt command.
- The directory to run the dbt command in.
- If set, passed as the --vars argument to the dbt command. Should be set as a Python dictionary, as it will be passed to the dbt command as YAML.
- If set, passed as the --models argument to the dbt command.
- If set, passed as the --exclude argument to the dbt command.
- If set, passed as the --select argument to the dbt command.
- If set, passed as the --selector argument to the dbt command.
- The dbt CLI to use (the dbt_bin argument). Defaults to dbt, so assumes it's on your PATH.
- The operator will log verbosely to the Airflow logs.
- If set to True, passes the --warn-error argument to the dbt command and will treat warnings as errors.
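To make the mapping from operator arguments to dbt command-line flags concrete, here is a minimal standard-library sketch. It is not the operators' actual implementation: the function `build_dbt_command` and every parameter name except `dbt_bin` are hypothetical, chosen only for illustration. It assumes that `--warn-error` is given before the subcommand and that the vars dictionary can be serialised as JSON, which dbt can read because JSON is also valid YAML.

```python
import json
import shlex


def build_dbt_command(
    subcommand,
    dbt_bin="dbt",
    profiles_dir=None,
    target=None,
    dbt_vars=None,
    models=None,
    exclude=None,
    select=None,
    selector=None,
    warn_error=False,
):
    """Return the argument list for one dbt invocation (hypothetical helper)."""
    cmd = [dbt_bin]
    # Assumption: --warn-error is passed as a global flag, before the subcommand.
    if warn_error:
        cmd.append("--warn-error")
    cmd.append(subcommand)

    # Each option is forwarded only when it has been set.
    if profiles_dir is not None:
        cmd += ["--profiles-dir", profiles_dir]
    if target is not None:
        cmd += ["--target", target]
    if dbt_vars is not None:
        # A Python dict is serialised to JSON, which is also valid YAML.
        cmd += ["--vars", json.dumps(dbt_vars)]
    if models is not None:
        cmd += ["--models", models]
    if exclude is not None:
        cmd += ["--exclude", exclude]
    if select is not None:
        cmd += ["--select", select]
    if selector is not None:
        cmd += ["--selector", selector]
    return cmd


if __name__ == "__main__":
    # The usual order: dbt run first, then dbt test against the same target.
    for step in ("run", "test"):
        print(shlex.join(build_dbt_command(step, target="dev", warn_error=True)))
```

Returning a list rather than a single string means the result can be handed to `subprocess.run` without going through a shell, so values such as the vars payload need no extra quoting.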
